History of AI

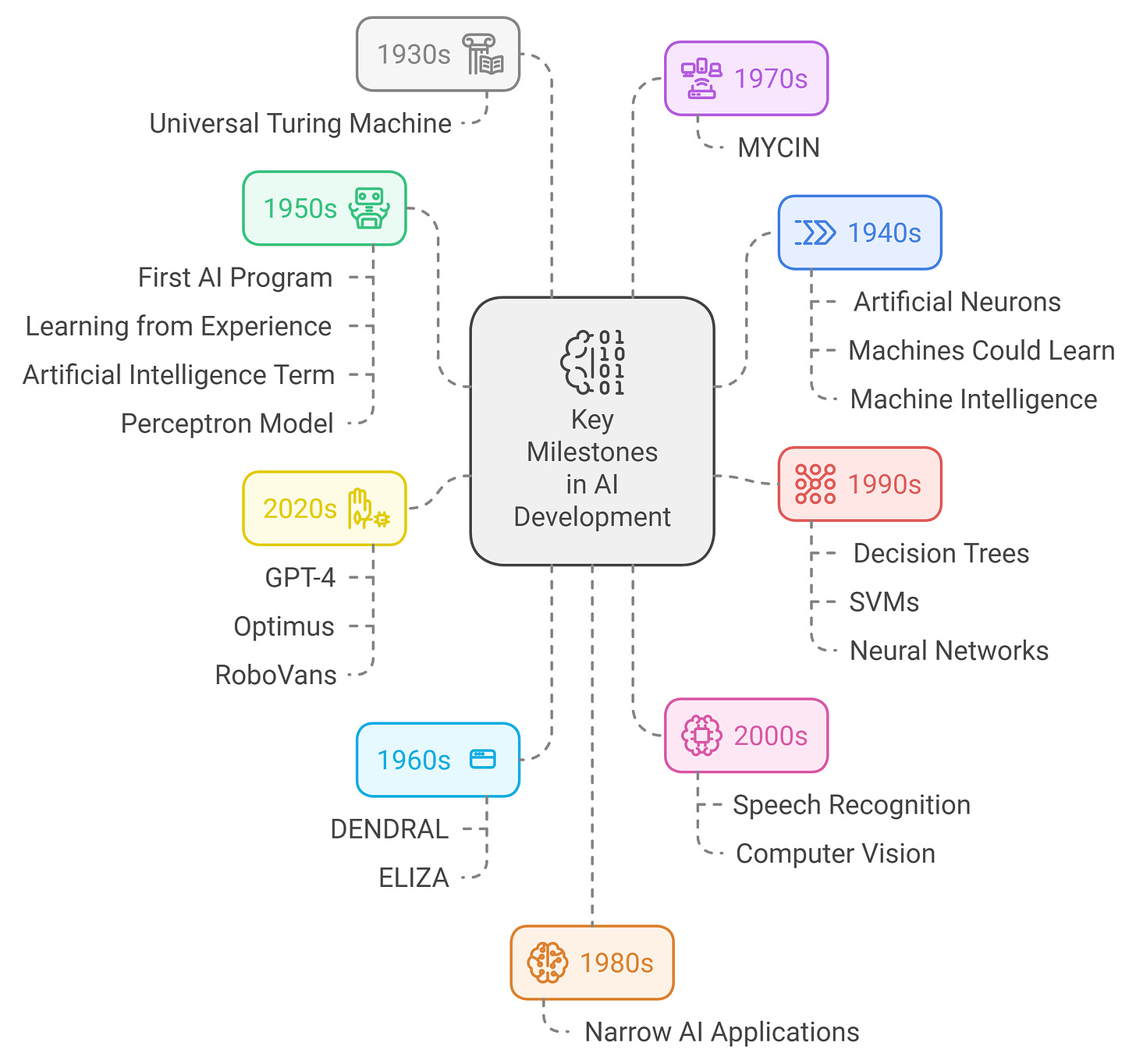

Recently, OpenAI launched new models for ChatGPT, and Elon Musk showcased Tesla's Optimus robot and Robovan. Major organizations like Meta, Google, and Microsoft are also heavily involved in AI development.

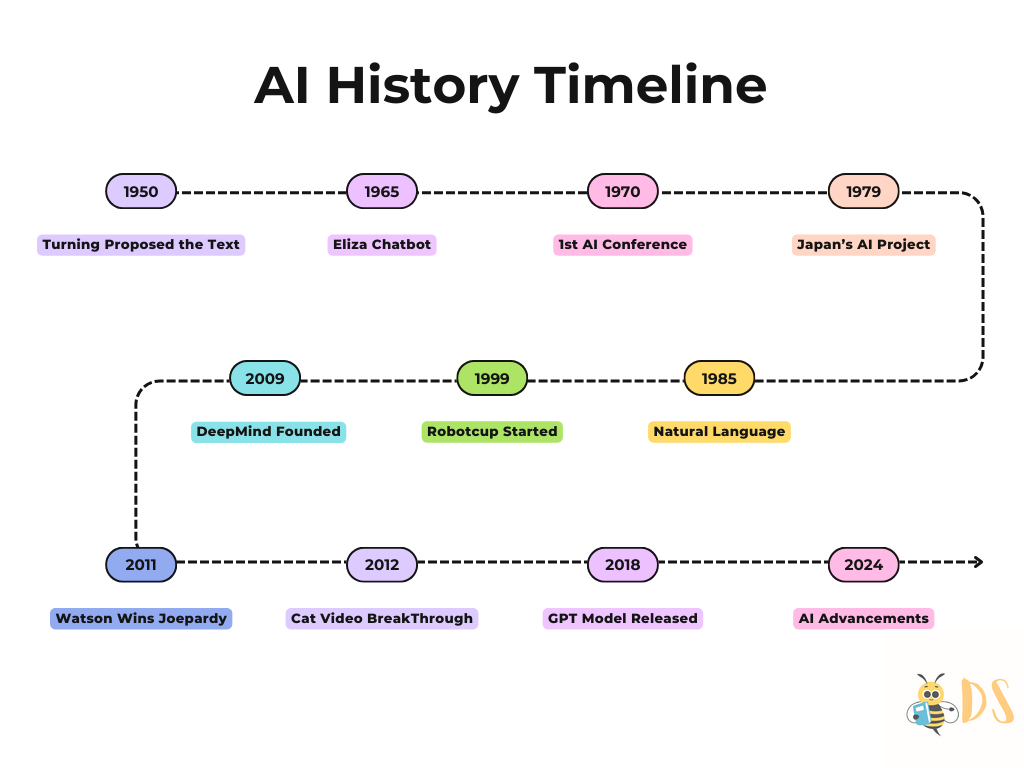

In this article, we’ll explore the amazing history of Artificial Intelligence, tracing its roots and the key milestones that have led to the advancements we see today.

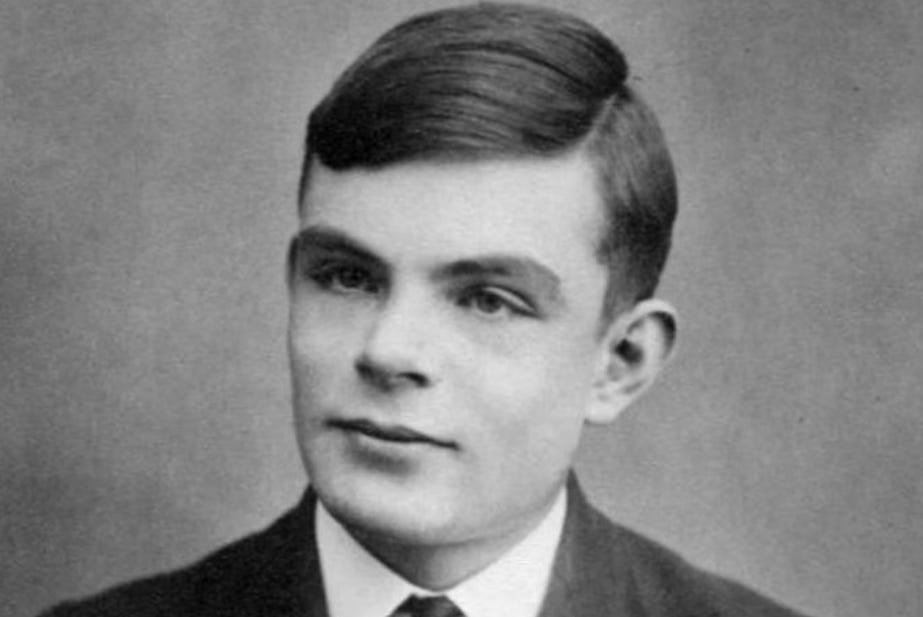

The Birth of AI: Alan Turing's Vision

Alan Turing's Early Contributions

Alan Turing, a British mathematician and computer scientist, is often regarded as the father of AI. In 1935, Turing described an abstract computing machine consisting of an unlimited memory tape and a scanner that reads and writes symbols on it, following a set of instructions. His 1936 paper "On Computable Numbers" showed that a single such machine, now called the "universal Turing machine," could carry out any computation that any other such machine could, simply by reading that machine's instructions from its tape. This idea laid the conceptual foundation for all modern stored-program computers.
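To make "reading and writing symbols on a tape, based on instructions" concrete, here is a small Python sketch. It is an ordinary (not universal) Turing machine simulator, and the rule-table format, state names, and binary-increment example are our own illustrative assumptions, not Turing's notation. In a loose sense, `run_turing_machine` plays the role of the universal machine, since it will execute any rule table it is handed.

```python
from collections import defaultdict

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# Moves are -1 (left) or +1 (right); "_" is the blank symbol.
Rules = dict[tuple[str, str], tuple[str, int, str]]

# Hypothetical example machine: add 1 to a binary number, starting at its last digit.
INCREMENT: Rules = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left edge: write a new leading 1
}

def run_turing_machine(rules: Rules, tape: str, state: str, head: int,
                       halt_state: str = "halt", max_steps: int = 10_000) -> str:
    """Simulate a one-tape Turing machine and return the non-blank part of the tape."""
    cells = defaultdict(lambda: "_", enumerate(tape))  # unbounded tape, blank by default
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    used = range(min(cells), max(cells) + 1)
    return "".join(cells[i] for i in used).strip("_")

print(run_turing_machine(INCREMENT, "1011", state="carry", head=3))  # 1100
```

Swapping in a different rule table gives a different machine without changing the simulator, which is the key insight behind the universal machine.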

During World War II, Turing worked as a cryptanalyst at Bletchley Park, contributing to breaking German military codes. It was during this period that Turing started thinking about machine intelligence, speculating that computers could one day learn from experience and solve problems based on guiding principles. This concept, now known as heuristic problem-solving, is central to AI.

Source: https://www.independent.co.uk/life-style/alan-turing-new-ps50-banknote-gay-codebreaker-mathematician-sexuality-pardon-a9005086.html

Turing's Machine Intelligence and the Turing Test

In a 1947 lecture in London, Turing took his ideas further by suggesting that machines could learn from experience and modify their own instructions. He elaborated on these ideas in his 1948 report "Intelligent Machinery," which introduced several key concepts of AI, including the idea of training a network of artificial neurons to perform specific tasks.

One of Turing's favorite examples of machine intelligence was chess. In principle, a computer could play chess by searching through every possible move, but the number of possibilities is astronomically large, so heuristics are needed to narrow the search to promising options. Turing designed chess-playing programs, but with no suitable computer available he could only test them by working through their instructions by hand.
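To see why heuristics matter, here is a minimal depth-limited minimax search in Python. Chess is far too big for a short example, so it uses a hypothetical stand-in game: players take 1 to 3 stones from a pile, and whoever takes the last stone wins. Beyond the depth limit, a crude heuristic score replaces exhaustive search. This is a common modern way to bound game-tree search, not a reconstruction of Turing's own program.

```python
def legal_moves(stones: int) -> list[int]:
    """A player may remove 1, 2, or 3 stones (but not more than remain)."""
    return [k for k in (1, 2, 3) if k <= stones]

def heuristic(stones: int, max_to_move: bool) -> float:
    """Score a position from the maximizing player's point of view."""
    if stones == 0:
        # No stones left: the previous player took the last one and won.
        return -1.0 if max_to_move else 1.0
    return 0.0  # unknown outcome: a crude guess used when the search is cut off

def minimax(stones: int, depth: int, max_to_move: bool) -> float:
    """Search `depth` moves ahead, then fall back on the heuristic."""
    if depth == 0 or stones == 0:
        return heuristic(stones, max_to_move)
    values = [minimax(stones - k, depth - 1, not max_to_move)
              for k in legal_moves(stones)]
    return max(values) if max_to_move else min(values)

def best_move(stones: int, depth: int) -> int:
    """Pick the move whose resulting position scores best for the maximizer."""
    return max(legal_moves(stones),
               key=lambda k: minimax(stones - k, depth - 1, False))

print(best_move(7, depth=8))  # 3 (leaves 4 stones, a losing position for the opponent)
```

With a shallow depth, most positions score 0 and the choice becomes a guess; a better heuristic is what lets a program play well without searching to the end of the game.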

In 1950, Turing proposed what is now called the Turing Test as a practical way to decide whether a machine exhibits intelligent behavior indistinguishable from that of a human. The test involves a computer, a human interrogator, and a human foil, who communicate only through typed text. If the interrogator cannot reliably tell which respondent is the computer, the machine passes the test.

Whether any AI system has truly passed the Turing Test is still debated, but the development of large language models like ChatGPT has reignited discussions about AI's capabilities.

Early Milestones in AI Development

The First AI Programs

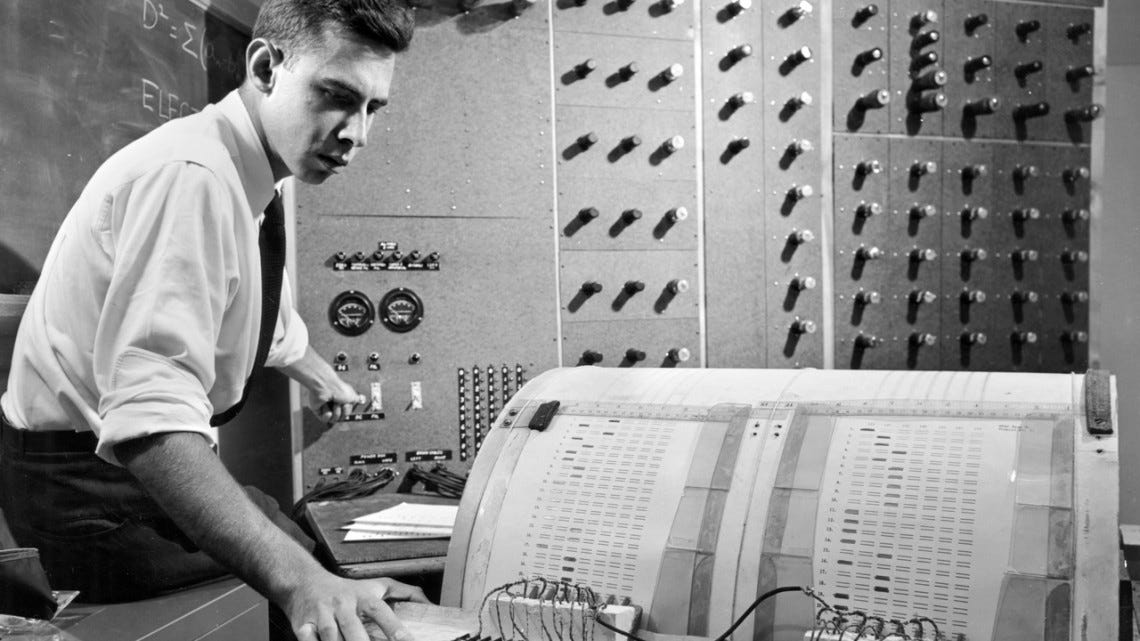

The first successful AI programs were developed in the early 1950s. One notable example is a checkers program written by Christopher Strachey in 1951, which ran on the Ferranti Mark 1 computer at the University of Manchester. By the summer of 1952, the program could play a complete game of checkers.

Source: https://medium.com/ibm-data-ai/the-first-of-its-kind-ai-model-samuels-checkers-playing-program-1b712fa4ab96

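Around the same time, Anthony Oettinger at the University of Cambridge wrote "Shopper," a program that searched for items in a simulated mall. While searching, Shopper memorized some of the items each shop stocked, so the next time it was asked for one of them it could go straight to the right shop. Published in 1952, it was one of the earliest demonstrations of machine learning: a system improving its performance based on past experience.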

In the U.S., Arthur Samuel wrote a checkers program for the IBM 701 and enhanced it over time so that it could learn from experience. In 1962, the program won a game against a former Connecticut checkers champion. Samuel's work was also one of the first efforts at evolutionary computing: a modified copy of the program played against the current best version, and the winner became the new standard.
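Samuel's "winner becomes the new standard" loop can be sketched in a few lines of Python. Playing real checkers matches is out of scope, so the hypothetical `challenger_wins` function simply prefers whichever weight vector is closer to a hidden target; the target, mutation size, and number of generations are arbitrary assumptions for illustration.

```python
import random

# Hypothetical stand-in for a checkers match: the version whose evaluation
# weights are closer to this hidden target "plays better".
TARGET = [0.8, -0.3, 0.5]

def strength(weights: list[float]) -> float:
    """Higher is better; 0.0 would be a perfect match with TARGET."""
    return -sum((w - t) ** 2 for w, t in zip(weights, TARGET))

def challenger_wins(challenger: list[float], champion: list[float]) -> bool:
    return strength(challenger) > strength(champion)

def evolve(generations: int = 500, mutation: float = 0.1, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    champion = [0.0] * len(TARGET)  # current best version
    for _ in range(generations):
        # Make a modified copy of the current best version...
        challenger = [w + rng.gauss(0.0, mutation) for w in champion]
        # ...let the two compete, and keep the winner as the new standard.
        if challenger_wins(challenger, champion):
            champion = challenger
    return champion

best = evolve()
print([round(w, 2) for w in best], round(strength(best), 4))
```

Because the champion is replaced only when it loses, its strength never decreases. This is essentially a (1+1) evolution strategy, a simple modern relative of Samuel's scheme.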

Neural Networks and Logical Reasoning in AI

John Holland, known today mainly as a pioneer of genetic algorithms, began with neural networks: in 1957 he developed a virtual rat for the IBM 701, showing how a neural network could learn to solve problems. His later work laid the foundation for genetic algorithms, which have since been used in practical applications such as working with a crime witness to generate a portrait of the suspect.

Source: https://www.timetoast.com/timelines/the-history-of-ai-c859c4b5-f2e6-4a51-b8e0-4cd422ef4598

Meanwhile, other researchers focused on logical reasoning. In 1955-56, Allen Newell, J. Clifford Shaw, and Herbert Simon developed the Logic Theorist, a program that proved theorems from Whitehead and Russell's Principia Mathematica and, for one theorem, found a more elegant proof than the one in the book. This work led to the General Problem Solver (GPS), a system that could solve a variety of puzzles using a trial-and-error technique.

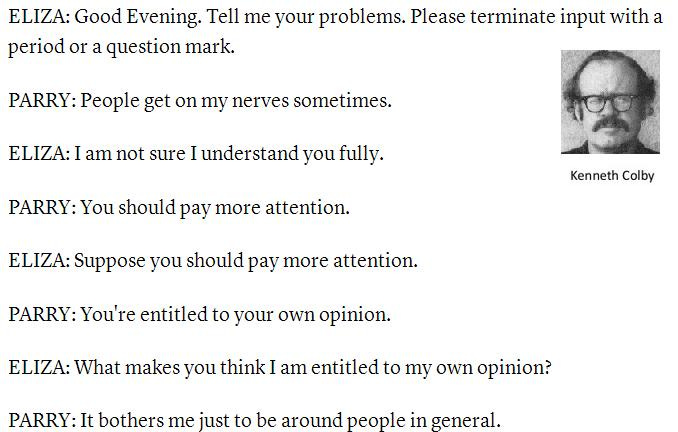

Eliza and Parry: Early AI Conversations

In the mid-1960s, Joseph Weizenbaum at MIT created Eliza, a program that mimicked a psychotherapist in typed conversations. In the early 1970s, Kenneth Colby developed Parry, a program designed to simulate a paranoid patient.

Source: https://www.scaruffi.com/mind/ai1970.html

Both systems showed that machines could hold seemingly intelligent conversations, although their responses came from pre-programmed patterns and templates rather than from any genuine understanding.
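The "pre-programmed" nature of these conversations is easy to see in code. Below is a tiny keyword-and-template responder in the spirit of Eliza, written in Python. The rules, word reflections, and replies are made up for illustration and are far simpler than Weizenbaum's original script.

```python
import re

# (pattern, reply template) pairs, tried in order; the last rule is a catch-all.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r".*"), "Please go on."),
]

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())

def respond(user_input: str) -> str:
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Fill the template with the reflected text captured by the pattern.
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please go on."

print(respond("I am worried about my exams"))
# How long have you been worried about your exams?
```

The program never models what an exam or a worry is; it only rearranges the user's own words, which is why such replies can feel attentive while involving no understanding at all.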

The Rise of AI Programming Languages

To support AI research, John McCarthy, a prominent AI researcher, developed the programming language LISP in 1960. LISP was designed for symbolic AI and for decades was the principal programming language for AI work in the United States.

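Later, in the early 1970s, Alain Colmerauer and colleagues in Marseille, France, developed PROLOG, another AI programming language. PROLOG is built on a powerful theorem-proving technique known as resolution, which made it well suited to knowledge-based systems.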

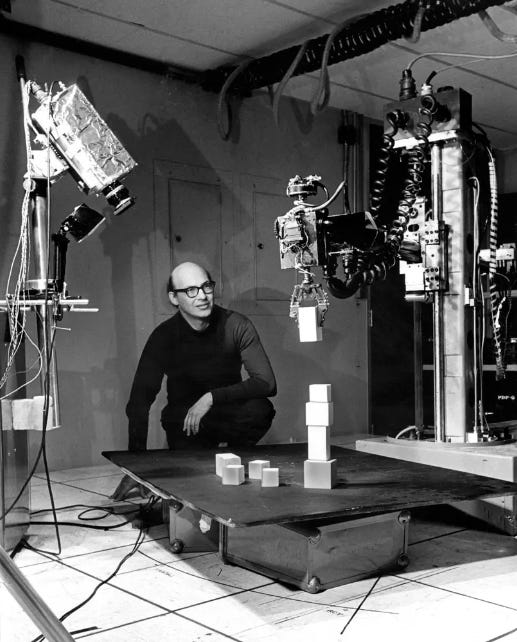

Microworlds: A Simplified Approach to AI

Around 1970, Marvin Minsky and Seymour Papert of MIT proposed that AI research focus on microworlds: simplified artificial environments in which programs could be developed and tested. One famous microworld program was SHRDLU, developed by Terry Winograd at MIT, which controlled a simulated robot arm that moved blocks in response to natural-language commands.

Source: https://www.nytimes.com/2016/01/26/business/marvin-minsky-pioneer-in-artificial-intelligence-dies-at-88.html

Another microworld success was Shakey, a mobile robot developed at Stanford Research Institute (SRI). Shakey could navigate a specially built, simplified environment and perform tasks like pushing objects. However, despite these early successes, approaches that worked in microworlds proved hard to scale up to complex real-world problems.

Expert Systems: Narrow AI in Action

The Rise of Expert Systems

In the 1970s and 1980s, AI researchers developed expert systems: programs designed to mimic human experts in narrow domains such as medical diagnosis or financial management. Within their specific fields, these systems could perform at a level comparable to human experts, thanks to their extensive knowledge bases.

DENDRAL and MYCIN: Early Expert Systems

One of the earliest expert systems was DENDRAL, begun in 1965 by Edward Feigenbaum and Joshua Lederberg at Stanford University. DENDRAL inferred the structures of chemical compounds from mass spectrometry data and performed at the level of expert chemists.

Source: https://www.computerhistory.org/timeline/1965/#169ebbe2ad45559efbc6eb357200f3cc

Another significant expert system was MYCIN, begun at Stanford in 1972 to diagnose blood infections. Using about 500 rules, MYCIN diagnosed infections and recommended treatments, often performing at the level of specialists in the field.

The Knowledge Base and Inference Engine

An expert system consists of a knowledge base (KB) and an inference engine. The KB contains rules derived from expert knowledge, while the inference engine applies these rules to known facts to draw conclusions. Some systems used fuzzy logic, which allowed them to reason with vague or uncertain information.
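The split between knowledge base and inference engine can be sketched in a few lines of Python. The animal-identification rules below are invented toy examples, and the engine uses forward chaining (repeatedly firing any rule whose conditions are all known facts). Real systems varied; MYCIN, for instance, reasoned backward from hypotheses. Fuzzy logic is left out to keep the sketch short.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    conditions: frozenset[str]  # if all of these facts are known...
    conclusion: str             # ...then this fact can be concluded

# Knowledge base: hypothetical toy rules, for illustration only.
KNOWLEDGE_BASE = [
    Rule(frozenset({"has_feathers"}), "bird"),
    Rule(frozenset({"bird", "cannot_fly", "swims"}), "penguin"),
    Rule(frozenset({"has_fur"}), "mammal"),
]

def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Inference engine: keep firing rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known

print(sorted(forward_chain({"has_feathers", "cannot_fly", "swims"}, KNOWLEDGE_BASE)))
# ['bird', 'cannot_fly', 'has_feathers', 'penguin', 'swims']
```

Keeping the rules as plain data means experts can extend the knowledge base without touching the engine, which was one of the main selling points of the approach.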

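Despite their success, expert systems had clear limitations, chiefly a lack of common sense. They could misinterpret situations outside their narrow expertise and might even act on absurd clerical errors, such as prescribing an obviously wrong drug dose because a patient's age and weight had been accidentally swapped.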

The CYC Project: Symbolic AI's Ambitious Experiment

In 1984, the Microelectronics and Computer Technology Corporation (MCC) launched the CYC Project, led by Douglas Lenat, to create a knowledge base containing a significant portion of human commonsense knowledge. Over the years, millions of rules were coded into CYC, allowing it to draw inferences about everyday, real-world situations.

Despite its vast knowledge base, CYC ran into the "frame problem": the difficulty of updating, searching, and manipulating a large body of symbolic knowledge in a realistic amount of time. This problem has been a significant challenge for symbolic AI systems.

Connectionism: The Emergence of Neural Networks

Early Theories on Neural Networks

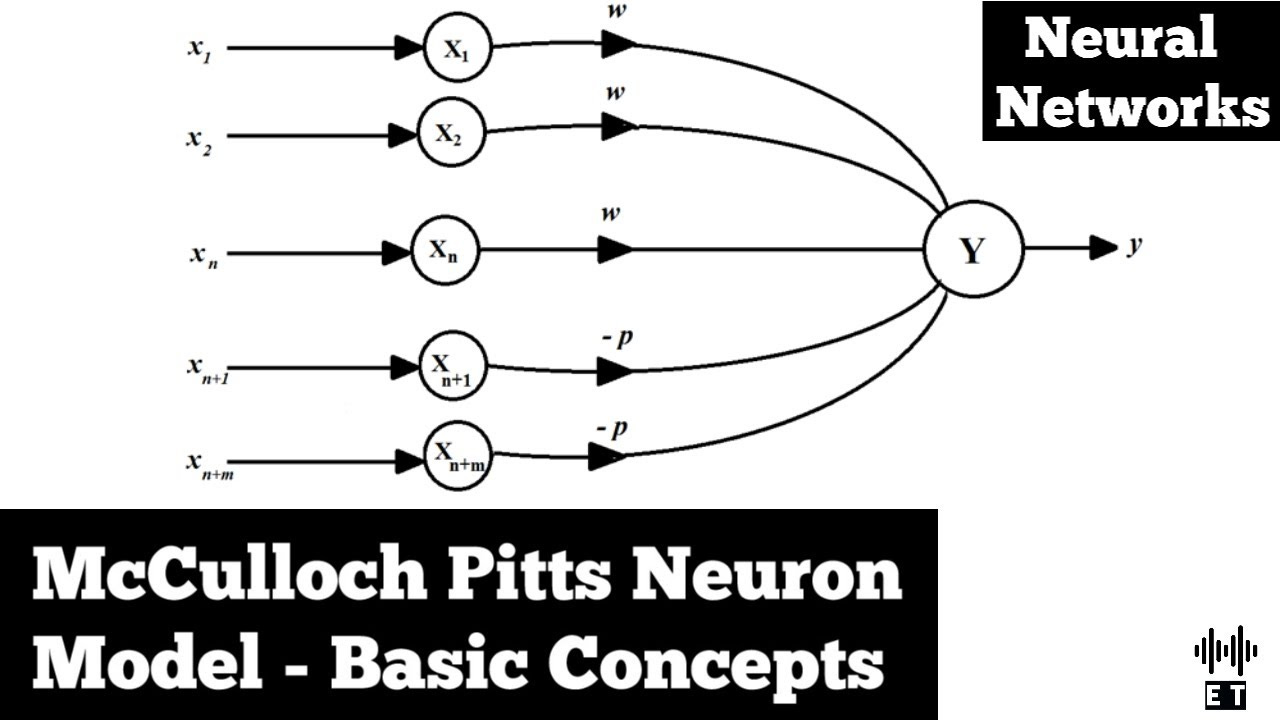

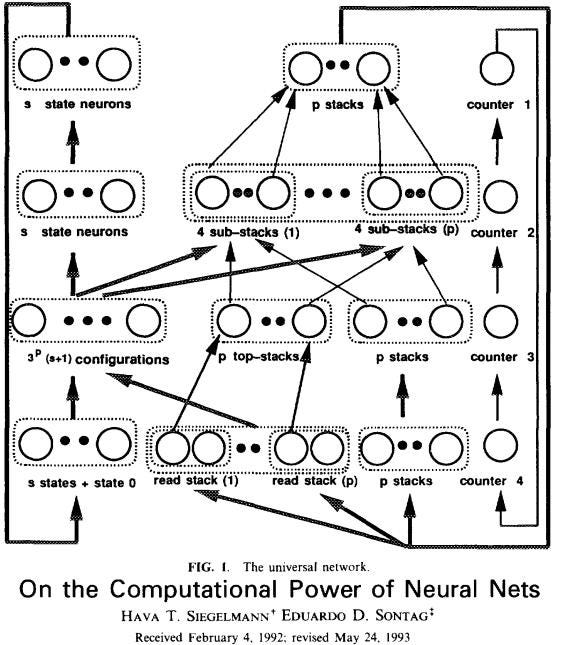

Connectionism, or neuron-like computing, grew out of efforts to understand how the brain functions at the level of individual neurons. In 1943, Warren McCulloch and Walter Pitts published a paper proposing that neurons in the brain act as simple digital processors, an idea that led to the concept of artificial neural networks.

Source: https://www.vrogue.co/post/the-mcculloch-and-pitts-1943-model-of-a-single-neuron-adapted-from
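A McCulloch-Pitts style neuron is just a threshold on a weighted sum of binary inputs. The Python sketch below uses the common modern simplification in which a negative weight stands in for an inhibitory input (the 1943 paper treated inhibition differently), and shows that single units can compute basic logic gates. The function names are our own.

```python
def mcculloch_pitts(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def logical_and(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [1, 1], threshold=2)  # both inputs must be on

def logical_or(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [1, 1], threshold=1)  # any one input is enough

def logical_not(a: int) -> int:
    return mcculloch_pitts([a], [-1], threshold=0)  # the input inhibits firing

pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([logical_and(a, b) for a, b in pairs])  # [0, 0, 0, 1]
print([logical_or(a, b) for a, b in pairs])   # [0, 1, 1, 1]
```

Since AND, OR, and NOT can be combined into any logical function, networks of such units can in principle compute anything a digital circuit can, which is what made the "neuron as digital processor" idea so influential.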

Early Neural Network Models

In 1954, Belmont Farley and Wesley Clark of MIT succeeded in running the first artificial neural network on a computer. Their network could be trained to recognize simple patterns, and they found that randomly destroying up to 10 percent of the neurons in a trained network did not affect its performance, much like the brain's ability to tolerate limited damage.

Perceptrons and Backpropagation

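In 1957, Frank Rosenblatt of the Cornell Aeronautical Laboratory began developing the perceptron, an early neural network model that could learn to classify its inputs from examples. Rosenblatt also used the term "back-propagating error correction," but the backpropagation algorithm now used to train multilayer networks was developed later and popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams. It became crucial to modern neural network development.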

Source: https://news.cornell.edu/stories/2019/09/professors-perceptron-paved-way-ai-60-years-too-soon
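The perceptron learning rule nudges the weights whenever the unit misclassifies an example. Here is a minimal single-layer version in Python; the learning rate, epoch count, and logic-gate training data are illustrative choices rather than anything from Rosenblatt's hardware.

```python
def predict(weights: list[float], bias: float, x: list[float]) -> int:
    """Output 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples: list[list[float]], labels: list[int],
                     epochs: int = 20, lr: float = 1.0) -> tuple[list[float], float]:
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                # Perceptron rule: shift the decision boundary toward the misclassified example.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias

INPUTS = [[0, 0], [0, 1], [1, 0], [1, 1]]
w, b = train_perceptron(INPUTS, [0, 0, 0, 1])  # learn logical AND
print([predict(w, b, x) for x in INPUTS])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (a hyperplane in higher dimensions), which is one reason training multilayer networks with backpropagation became so important.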

Neural Networks in Learning and Generalization

In 1986, David Rumelhart and James McClelland trained a neural network to form the past tenses of English verbs. After training, the network correctly generalized to verbs it had not seen before, showcasing the potential of neural networks for complex tasks like language processing.

Machine Learning and Data-Driven Approaches (1990s)

The 1990s marked a significant shift in artificial intelligence toward machine learning. Rather than relying mainly on hand-coded rules, systems increasingly learned from data using techniques such as neural networks, decision trees, and support vector machines, allowing them to recognize patterns and make predictions.

Source: https://www.scaruffi.com/mind/ai1990.html

Neural networks, inspired by the human brain, became popular for tasks such as speech recognition and movie recommendations, showcasing their effectiveness in handling complex data.

Growing computer processing power and data availability fueled the rise of data-driven AI, leading to new applications such as recommendation systems and natural language processing (NLP). This era laid the groundwork for later advances, with machine learning applied in domains ranging from speech recognition to financial forecasting.

The AI Boom: Deep Learning and Neural Networks (2000s-2010s)

The early 21st century saw the rise of deep learning, a branch of machine learning that uses many-layered neural networks loosely inspired by the brain. From the 2000s to the 2010s, deep neural networks achieved major successes in areas like image recognition, natural language processing, and game playing. Their layered design lets them learn increasingly complex patterns in data, which improved the accuracy of their predictions.

Source: https://www.timetoast.com/timelines/the-history-of-ai-c859c4b5-f2e6-4a51-b8e0-4cd422ef4598

Deep learning also led to big improvements in applications like speech recognition and computer vision. This transformed many industries by helping machines better understand and interact with human language and images.

During this time, major tech companies such as Google and Facebook, along with research labs like OpenAI (founded in 2015), invested heavily in AI research, speeding up the development of deep learning technologies. Their work produced many of the algorithms that underpin today's AI systems.

The development of artificial neural networks, which are designed to work like connected neurons in the brain, was key to advancing deep learning. These networks became the foundation of many AI applications, enabling them to handle and understand large amounts of data more effectively.

Generative Pre-trained Transformers: A New Era (GPT Series)

Generative Pre-trained Transformers (GPT) have transformed AI language modeling, especially with models like GPT-4. GPT models are built on the transformer architecture introduced by Google researchers in 2017 and are pre-trained on vast amounts of text, which allows them to write in a way that feels human and to handle complex language.

GPT-4 has made great strides in producing clear and relevant text, and it can help with tasks such as writing and translation. Because it is trained on so much text, it captures grammar, meaning, and many subtle details of human language.

Source: Openai.com

These models are more than just translation tools: they can also write essays, poems, and other creative pieces. This shows how AI can play a meaningful role in both creative and professional writing.

Recent chatbots such as ChatGPT, Google's Bard (since renamed Gemini), and Microsoft's Bing Chat (now Copilot) highlight what generative models can do. They can write, translate, and generate original content, showcasing the potential of AI for content creation and complex language tasks.

Final Thoughts

The history of AI is filled with remarkable achievements, from Turing's early theories through expert systems to the rise of neural networks and deep learning. Each step has brought us closer to building machines that can think, learn, and solve problems. As AI keeps growing, it has the potential to change industries and significantly shape our future.

Despite the progress, there are still challenges to overcome, like the frame problem, developing general intelligence, and addressing ethical issues. However, the drive for innovation and curiosity in AI continues to push the limits of what machines can do.

As AI research explores new possibilities, we can't help but wonder what the future holds and how the relationship between human and machine intelligence will shape our world.