The Imperative of Responsible AI

AI systems must be developed and deployed with a keen awareness of their ethical implications. Responsible AI is not just a buzzword; it is an imperative.

In this article, we will explore the core principles of responsible AI, the challenges we face in its implementation, and the frameworks that can guide us towards a future where AI serves the common good. As we delve into these topics, we aim to foster a deeper understanding of how we can harness the potential of AI while mitigating risks and ensuring equitable outcomes for all.

Let’s start with a story that shows why we need responsible AI.

Steve Beauchamp, a man in his 80s, had a simple wish: to secure his family's future. Little did he know that what seemed like a golden opportunity would turn into a nightmare. He lost $690,000, every last cent gone without a trace, to one of the most sophisticated digital scams of our time.

The scammers had taken a genuine interview with Elon Musk and manipulated it using advanced AI tools. With striking precision, they replaced his voice and altered his mouth movements to match their script, making it seem as if Musk was offering an exclusive investment deal. Steve wasn’t alone.

Across the world, thousands of people were falling victim to similar scams, all powered by AI-generated deepfake videos of Musk, Warren Buffett, and Jeff Bezos pushing fraudulent schemes. Steve Beauchamp’s story is a chilling reminder of how far AI has come, and of how urgently we need responsible AI.

What’s Responsible AI?

Artificial intelligence (AI) refers to computers or machines designed to learn and reason in ways loosely modeled on human thinking. Instead of only following fixed instructions, AI can analyze data, recognize patterns, and make decisions on its own.

Responsible AI means making sure that AI is developed and used in a way that is safe, fair, and ethical. It’s about ensuring that AI systems work as intended, without harming people or causing unintended problems.

This includes avoiding biased decisions (like treating certain groups unfairly), keeping personal data private, and being transparent about how AI works.

For example, if a company uses AI to approve loans, responsible AI would ensure the system doesn’t unfairly reject people based on their race, gender, or background. It also means that AI systems should have human oversight, so someone can step in if things go wrong.

Why does Responsible AI matter?

As AI becomes more integrated into our daily lives, the potential for misuse and harm grows. Here are a few reasons why responsible AI is essential:

Fairness

AI systems can reflect or amplify human biases. For example, a hiring algorithm trained on historical hiring data may favor certain demographics over others, leading to discriminatory hiring practices.

In 2018, it was revealed that Amazon had scrapped an AI recruiting tool because it was biased against women. The system had been trained on resumes submitted to the company over a ten-year period, most of which came from men.
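One common way to make this kind of bias measurable is to compare selection rates across groups. The sketch below is a minimal illustration, not Amazon's method: it uses invented screening outcomes, computes each group's selection rate, and divides the lowest rate by the highest (often called the disparate impact ratio). The 0.8 cutoff reflects the "four-fifths rule," a rule of thumb from U.S. employment guidance; the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of applicants selected in each group.

    `records` is an iterable of (group, selected) pairs, where `selected` is a bool.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {g: chosen[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical screening outcomes (invented data): (group, passed_screen)
outcomes = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'men': 0.6, 'women': 0.3}
print(round(ratio, 2))  # 0.5
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("Potential adverse impact: review the model and its training data")
```

A ratio well below 0.8, as in this made-up data, does not prove discrimination on its own, but it flags a system that deserves a closer look.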

Transparency

Many AI systems operate like a "black box," making it hard to understand how decisions are made. For instance, if an AI system denies a loan application, the applicant may not know why their application was rejected. This lack of transparency can lead to mistrust.

Work on Explainable AI (XAI) aims to bring clarity to AI decision-making, helping individuals understand how their data is used and how decisions are reached.
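For a simple model, an explanation can be as direct as showing how much each input pushed the decision up or down. The sketch below assumes a hypothetical linear loan-scoring model; the feature names, weights, and threshold are invented for illustration. Real systems are usually more complex and rely on attribution techniques such as SHAP or LIME to produce comparable per-feature explanations.

```python
# Hypothetical linear loan-scoring model: weights, bias, and threshold are illustrative only.
WEIGHTS = {
    "income_thousands": 0.04,
    "debt_to_income": -3.0,
    "late_payments": -0.5,
}
BIAS = -1.0
THRESHOLD = 0.0  # approve when score >= THRESHOLD


def explain_decision(applicant):
    """Score an applicant and return the decision, the score, and each feature's contribution."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "denied"
    # Sort so the features that hurt the applicant most come first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return decision, score, ranked


# Hypothetical applicant
applicant = {"income_thousands": 50, "debt_to_income": 0.6, "late_payments": 3}
decision, score, ranked = explain_decision(applicant)
print(f"Decision: {decision} (score {score:.2f})")
for feature, impact in ranked:
    print(f"  {feature}: {impact:+.2f}")
```

Here the applicant could be told that their debt-to-income ratio and late payments weighed most heavily against approval, which is far more actionable than a bare "denied."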

Privacy

AI often relies on vast amounts of personal data, raising privacy concerns. For example, facial recognition technology used by law enforcement has come under scrutiny for its potential to invade privacy and surveil individuals without their consent.

In 2019, San Francisco became the first major U.S. city to ban the use of facial recognition by city agencies, highlighting the need for responsible data practices.

Accountability

If an AI system causes harm, as in a self-driving car accident, determining accountability can be challenging. In 2018, a self-driving Uber vehicle struck and killed a pedestrian. The incident raised questions about who was responsible: the car manufacturer, the software developers, or the vehicle's human operator? Responsible AI frameworks advocate for clear accountability measures to address such scenarios.

Inclusivity

Responsible AI aims to serve diverse populations. For example, facial recognition technology has been shown to be less accurate for people with darker skin. Studies have found that some commercial systems misclassify darker-skinned women up to about a third of the time, far more often than lighter-skinned men, which can lead to unfair treatment.
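Gaps like this stay hidden if a system is judged only by its overall accuracy. The sketch below, using invented test results, computes error rates separately for each group; the group labels, counts, and the `error_rates_by_group` helper are hypothetical and are not taken from any published study.

```python
from collections import Counter


def error_rates_by_group(results):
    """Return the misclassification rate for each group.

    `results` is an iterable of (group, predicted_label, true_label) tuples.
    """
    totals, errors = Counter(), Counter()
    for group, predicted, actual in results:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Hypothetical test results (invented data): 100 samples per group.
results = (
    [("lighter-skinned men", "male", "male")] * 99
    + [("lighter-skinned men", "female", "male")] * 1
    + [("darker-skinned women", "female", "female")] * 70
    + [("darker-skinned women", "male", "female")] * 30
)

rates = error_rates_by_group(results)
overall = sum(p != a for _, p, a in results) / len(results)
print(f"overall error: {overall:.1%}")
for group, rate in rates.items():
    print(f"{group}: {rate:.1%}")
```

In this made-up data the overall error rate is 15.5%, which masks a 1% error rate for one group and a 30% error rate for the other.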

Accessibility

Ensuring AI technologies are accessible to all, including individuals with disabilities, is crucial. For instance, voice-activated AI assistants can greatly enhance accessibility for those with mobility issues.

However, if these technologies only recognize standard accents or speech patterns, they may exclude non-native speakers or those with speech impairments. Companies like Microsoft have developed AI-driven accessibility tools, such as real-time captioning, to improve inclusivity.

Easing fears of job displacement

AI can automate repetitive tasks that humans used to perform. Although some estimates suggest that millions of jobs could be lost to automation in the coming years, new jobs that involve working with AI will also emerge. Learning about and using AI responsibly can help people navigate this shift in the job market more smoothly.

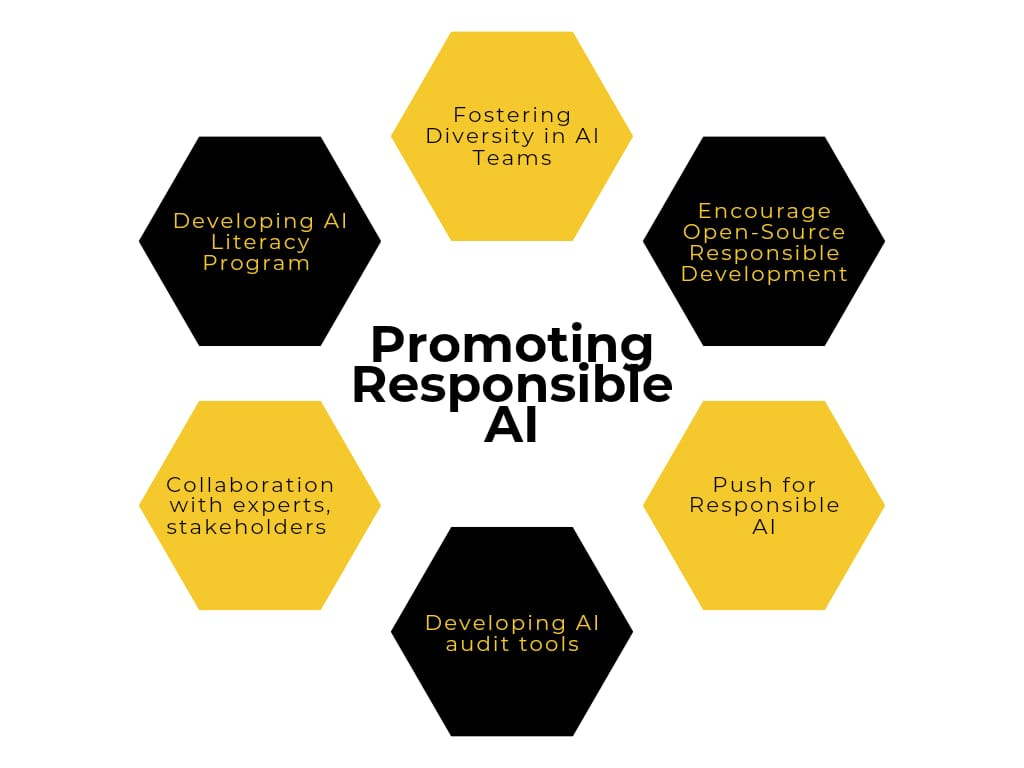

How Can We Promote Responsible AI?

Collaborate with Experts and Stakeholders

Work with AI researchers, ethicists, and industry professionals to advocate for responsible AI at a higher level. Join AI ethics groups or communities like the Partnership on AI or IEEE’s Global Initiative on Ethics of Autonomous and Intelligent Systems. These groups help shape guidelines for responsible AI use in various industries. You can contribute by sharing ideas, participating in discussions, or joining research efforts.

Push for Responsible AI in Your Organization

If you work in an industry that uses AI, promote responsible practices within your company or team. Propose the formation of an AI ethics board within your organization. This board can review AI projects to ensure they meet ethical standards like fairness, transparency, and privacy. Advocate for regular audits of AI systems to ensure they are functioning responsibly over time.

Develop AI Auditing Tools

Create or advocate for tools that can automatically audit AI systems for bias, fairness, and transparency. If you’re technically skilled, work with data scientists or developers to design AI tools that scan systems for bias. If you’re not technical, collaborate with experts to propose using existing solutions, like Fairness Indicators from Google or IBM’s AI Fairness 360, which help evaluate AI systems for fairness and accountability.

Encourage Open-Source Responsible AI Development

Push for the development of open-source AI tools that incorporate ethics and transparency by design. Support or contribute to open-source AI initiatives like TensorFlow or PyTorch, advocating for transparency in their algorithms. Help ensure that open-source AI platforms have built-in features that monitor for bias, promote fairness, and include ethical guidelines in their documentation.

Foster Diversity in AI Teams

Create or support initiatives that increase diversity in AI development teams, which can help reduce bias and create more inclusive AI systems. Establish a diversity-focused hiring program in your organization or fund scholarships for underrepresented groups in AI and tech fields. Partner with educational institutions or organizations like Black Girls Code or AI4All to promote diversity in tech and AI.

Develop AI Literacy Programs

Increase public understanding of AI by creating educational programs that explain the importance of responsible AI. Partner with universities, NGOs, or tech companies to launch AI literacy initiatives. These programs can teach communities about how AI works, its ethical implications, and how to engage with it responsibly. You could also create webinars or workshops aimed at non-tech audiences to build awareness around AI ethics.

Collaborate with Academic Institutions

Support research initiatives that focus on the ethical use of AI. Partner with universities or research labs to fund or contribute to studies on the long-term societal impact of AI. You could sponsor research grants for AI ethics or collaborate on projects that test AI in real-world settings to identify issues early and propose responsible solutions.

Final words…

As we explore the world of AI, it’s important to remember that this isn’t a complete list. AI technology is always changing, and our approach to using it responsibly needs to keep up. We have to stay aware and flexible, making sure our guidelines and practices adapt to these changes. By working together and prioritizing fairness and openness, we can create a future where AI improves our lives in a way that benefits everyone. Let’s all commit to making responsible AI a reality!