AI Myths: Fact vs. Fiction

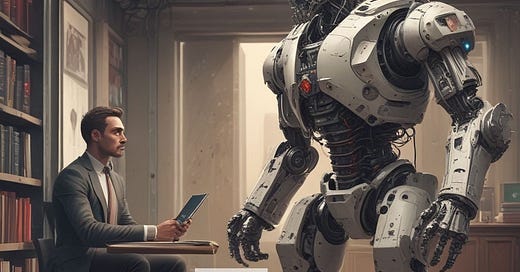

Explore the truth behind common AI myths. From job fears to AI's "objectivity," we debunk misconceptions and reveal what AI can—and can't—do.

In less than a year, from June 2022 to March 2023, monthly search volume for 'AI' skyrocketed from 7.9 million to a staggering 30.4 million. This explosive growth isn’t just a passing trend; it reflects society’s increasing curiosity and concern about artificial intelligence.

Along with this surge in interest, myths and misconceptions about AI have spread just as quickly. A 2023 Forbes Advisor Survey found that 77% of respondents expressed concern that AI could lead to job losses within the next year, with 44% feeling very concerned. While AI undoubtedly impacts industries and workflows, the truth is often more nuanced than the myths and headlines suggest.

So, what’s fact, and what’s fiction? Let’s dive into some of the biggest myths surrounding AI to separate reality from the hype.

Busting 7 Common Myths About AI: What It Can (and Can't) Do

Myth: AI Will Soon Take Over All Jobs

Reality: While AI automates some tasks, most jobs require uniquely human qualities—creativity, emotional intelligence, complex decision-making—that AI simply can’t replicate. Yes, we’re seeing automation, but AI is more likely to redefine jobs rather than eliminate them. For example, data analysts may shift to roles that manage and improve AI systems rather than analyzing raw data themselves.

Did ATMs replace bank tellers? Not entirely. In the same way, new roles are emerging around AI, focused on its oversight and improvement.

Myth: AI Systems Are Completely Objective and Unbiased

Reality: AI inherits biases from the data it’s trained on. If the data carries historical biases, the AI system can produce biased outcomes, especially in areas like hiring, criminal justice, and lending. That’s why we need diverse datasets and ethical AI practices to minimize bias.

For example, an AI recruiting tool trained on past resumes could be biased against certain demographics if historical data reflects biased hiring practices. Bias-free AI is still a work in progress.
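To make the mechanism concrete, here is a minimal Python sketch. Everything in it is hypothetical: the toy hiring records, the group labels "A" and "B", and the deliberately naive "model" that only learns each group's past hire rate. Real recruiting tools are far more complex, but the way skewed data turns into skewed output is similar in spirit.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# In this made-up data, group "A" was hired far more often than group "B".
HISTORY = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def learn_hire_rates(records):
    """Return the fraction of past candidates hired in each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def naive_model(rates, group, threshold=0.5):
    """Recommend a candidate only if their group's past hire rate meets the threshold."""
    return rates[group] >= threshold


rates = learn_hire_rates(HISTORY)
print(rates)                    # past hire rate per group
print(naive_model(rates, "A"))  # recommendation for a group A candidate
print(naive_model(rates, "B"))  # recommendation for a group B candidate
```

The model knows nothing about any individual candidate's skills, yet it recommends group A candidates and rejects group B candidates, simply because that is what the historical data looked like.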

Myth: AI Can “Think” and “Feel” Like Humans

Reality: AI has no consciousness, emotions, or understanding. It can process data patterns, but it doesn’t “understand” them in the human sense. For instance, AI can translate languages by identifying patterns, but it doesn’t understand the cultural nuances of those languages.

AI lacks the contextual awareness and intuition that humans bring to problem-solving. AI is more like a tool—a powerful one—but it’s not a substitute for human intelligence.

Myth: AI Will Develop Human-Like Intuition and Creativity

Reality: While AI can mimic certain creative tasks—like generating music or art—it lacks true creativity. It generates outputs based on algorithms and data but lacks the emotional intent and vision that humans bring. AI can “compose” music based on patterns, but it doesn’t capture the soul behind a melody.

Artists and designers may find AI helpful in creating drafts, but the magic of creativity still rests with humans.

Myth: Only Tech Experts Need to Understand AI

Reality: AI affects more areas of life than we might realize—finance, healthcare, education, and entertainment. Basic AI literacy can empower everyone to make informed decisions about its benefits and risks. Knowing what AI can do (and what it can’t) helps consumers, policymakers, and business leaders to understand its impact.

Why Does It Matter? AI is shaping the future for everyone, so a little knowledge goes a long way in making smart choices—whether it’s privacy on social media or understanding your doctor’s AI-assisted diagnosis.

Myth: AI Is Infallible and Makes Fewer Mistakes Than Humans

Reality: AI systems make errors, especially when trained on flawed data or facing unpredictable situations. AI is far from perfect and needs human oversight to avoid potentially harmful mistakes, especially in high-stakes applications like medical diagnostics or legal decisions.

Just because an algorithm says so doesn’t make it true. Human intuition and ethics still play a crucial role in AI-driven decisions.

Myth: AI Is a Recent Phenomenon

Reality: AI may feel new, but research in the field dates back to the mid-20th century. The recent surge is due to advances in computing power, data availability, and more refined algorithms. AI’s history is one of gradual evolution, not sudden invention, from early chess-playing programs to today’s sophisticated AI-driven virtual assistants. Refer to our previous post to explore the evolution of AI.

Bottom Line

AI is a transformative force, but it’s far from all-knowing or all-powerful. The misconceptions surrounding AI can fuel unnecessary fears or lead to misplaced confidence in its abilities. By separating fact from fiction, we can harness AI responsibly, maximizing its benefits while minimizing potential risks. A clear understanding of what AI truly is, and isn’t, empowers us to make informed, balanced decisions as we navigate an increasingly AI-driven world. Embracing the reality of AI, rather than the myths, will help us build a future where humans and AI work in harmony. Our previous post, Responsible AI, covers this in more detail.